As our company keeps growing, we are exploring some new options for faster networking. Sadly, 10 Gbit networking is still way too expensive: network cards, switches and even cables cost 20-50 times more than cheap 1 Gbit hardware, especially since every computer has a 1 Gbit network card on the mainboard anyway.

Since our new server has a pretty big and fast RAID, we do not want access to it limited to just 1 Gbit. The new RAID can read and write data at up to 1 GB/s, and delivering that over the network would require a 10 Gbit link. But since that is too expensive, it is out of the question.
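To make the numbers above concrete, here is a small back-of-the-envelope sketch of the unit conversion. It assumes decimal units (1 GB = 1000 MB) and ignores protocol overhead, so real transfers will land somewhat below these values. The function names are made up for this example.

```python
def mb_per_s_to_gbit(mb_per_s: float) -> float:
    """Convert megabytes per second to gigabits per second (8 bits per byte)."""
    return mb_per_s * 8 / 1000


def gbit_to_mb_per_s(gbit: float) -> float:
    """Convert gigabits per second to megabytes per second."""
    return gbit * 1000 / 8


# The RAID's peak rate of 1000 MB/s expressed as a network line rate.
raid_line_rate_gbit = mb_per_s_to_gbit(1000)

# What a single 1 Gbit link can carry at best, before any overhead.
single_link_mb_s = gbit_to_mb_per_s(1)

print(f"RAID peak needs about {raid_line_rate_gbit:.0f} Gbit/s")
print(f"One 1 Gbit link carries at most {single_link_mb_s:.0f} MB/s")
```

So a single 1 Gbit link covers only about an eighth of what the RAID can deliver, which is why the rest of this post is about bundling several links.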

In case you are interested, here are some pics of the old and the new server:

This is the old server (actually one of two), which just had a small RAID 5 plus some extra hard disks. It was an older Core2Duo with 2 TB of storage. We did not use it as a file server so much (that's what the other server did), but for databases, websites, services and mostly backups.

The new server is no longer just a desktop PC, but a 4U server (without a server rack) with two Xeon quad-core CPUs (i7 920 equivalents), 24 GB RAM and a really nice Areca RAID controller for 16+4 hard disks of 1.5 TB each (total=24TB), plus another RAID with 3 TB for backups. Not all of that space is available since the 20 hard disks are in a RAID 60, but we have plenty of free space. We now use this server for pretty much everything: development, file server, all the websites (including this blog), the build server, the content server, many multiplayer servers, lots of services and much, much more.
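For anyone wondering how much space a RAID 60 leaves over, here is a rough sketch of the usual calculation. A RAID 60 stripes across several RAID 6 groups, and each group gives up two disks for parity. How the 20 disks are split into groups is not stated in this post, so the group counts below are pure assumptions, and `raid60_usable_tb` is a name invented for this example.

```python
def raid60_usable_tb(num_disks: int, disk_tb: float, groups: int) -> float:
    """Usable capacity of a RAID 60 built from equally sized RAID 6 groups."""
    if groups < 2 or num_disks % groups != 0:
        raise ValueError("disks must split evenly into at least two groups")
    disks_per_group = num_disks // groups
    if disks_per_group < 4:
        raise ValueError("a RAID 6 group needs at least four disks")
    # Each RAID 6 group loses two disks' worth of space to parity.
    return groups * (disks_per_group - 2) * disk_tb


# Assumed layouts for 20 disks of 1.5 TB each (not confirmed by the post).
print(raid60_usable_tb(20, 1.5, groups=2))  # two groups of ten disks
print(raid60_usable_tb(20, 1.5, groups=4))  # four groups of five disks
```

More groups cost more parity disks but survive more simultaneous disk failures, so the split is a trade-off between space and safety.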

Another picture of the inside of the new server. Please note that the second RAID controller is not connected yet and both the SSD and 4 more HDDs are missing. I built most of the cables myself from stuff lying around from other computers. I also added some more fans because the RAID controllers apparently do not like heat so much, and the server is pretty loud anyway.

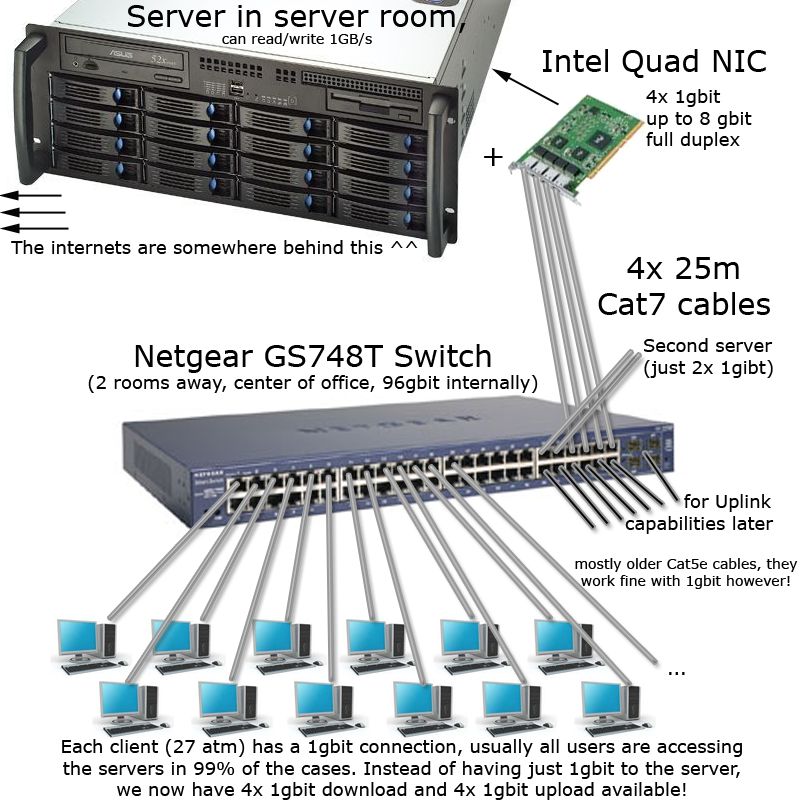

To achieve more than 1 Gbit to and from the server (which is definitely the bottleneck) we are using a different trick called Link Aggregation, also known as trunking, NIC teaming, link bundling, etc. The easiest way to find hardware that supports this technique is usually to search for the 802.3ad standard, which most switches and multi-port network cards support nowadays. The problem is finding a really good switch that can actually handle the workload of many clients plus four 1 Gbit cables to the server, which in turn needs a really good 4x 1 Gbit network card.

One major problem while researching network cards and switches is the incredibly annoying naming and abbreviations all those devices have. Only after a while do you find out what LR, SR, LX, TRB, etc. and all the 802 standards mean. After some suggestions from my brother and lots of research (it is not an easy topic if you only play system and network admin once a year ^^) I decided to get a nice Netgear switch with 48 ports (the GS748T) and a powerful Quad Port Server Adapter from Intel. 24 ports plus some of the other switches we have lying around would also have been enough, but this was the only switch I could get easily right now and it was only 25% more expensive (it also has a few more cool features). This way I have enough ports to do more trunking in the future (lots of ports will be used up by connecting switches, servers or even my computer).
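One thing worth knowing about link aggregation is that a single connection does not get spread over all cables. The switch and the network card pick one link per conversation, typically by hashing the addresses involved. The exact hash policy depends on the vendor and the configuration, so the following is only a toy sketch: the XOR of the last MAC address bytes is an illustrative assumption, and all MAC addresses and function names are made up.

```python
def parse_mac(mac: str) -> bytes:
    """Turn 'aa:bb:cc:dd:ee:ff' into six raw bytes."""
    return bytes(int(part, 16) for part in mac.split(":"))


def pick_link(src_mac: str, dst_mac: str, num_links: int) -> int:
    """Pick one link of the trunk for a source/destination pair.

    Illustrative policy only: XOR the last byte of both addresses and
    take the result modulo the number of links in the trunk.
    """
    src = parse_mac(src_mac)
    dst = parse_mac(dst_mac)
    return (src[-1] ^ dst[-1]) % num_links


SERVER_MAC = "00:1b:21:00:00:10"  # hypothetical server address
CLIENT_MACS = [f"00:1b:21:00:00:{i:02x}" for i in range(1, 9)]  # hypothetical

# Every client is pinned to exactly one of the four links.
assignment = {mac: pick_link(SERVER_MAC, mac, 4) for mac in CLIENT_MACS}
for mac, link in assignment.items():
    print(f"{mac} -> link {link}")
```

The practical consequence is that one client never gets more than the speed of one link, but many clients together can fill all four.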

Okay, so how does this work now? Let me explain with a little diagram I just drew (the hardware components actually look this way, see above for details):

As you can hopefully see from my amazing drawing skills (now that real graphic artists are blogging on this blog, this is probably not funny anymore) each client is still connected through a 1 Gbit network card and cable to the new switch. The older switches we had were kinda weak and some people could not really enjoy the full speed, either because we cascaded too many 8-port switches or because some of them were just overwhelmed by the current load. Now the network is much better, and we will do some stress tests this week. Since most people are trying to access the server (or one of the internet connections behind the server), much more bandwidth to and from the server can now be provided with the help of Link Aggregation (802.3ad). In fact, if 4 people are downloading some huge files from the server simultaneously, each of them can still enjoy up to 120 MB/s while some other people write something at the same time. The RAID controller will probably become the limit first, since it can only provide 1000 MB/s when reading or writing sequentially, but this setup should be 10-40 times faster than what we used in the past years. I will blog more next year about the engine, all da cool games and why we need all this server stuff in the near future.
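Here is a rough model of who limits whom in this setup, looking at one direction only (for example everybody downloading). It assumes the theoretical 125 MB/s per 1 Gbit link, a perfectly even spread of clients over the trunk links (which real hashing does not guarantee) and the 1000 MB/s sequential figure for the RAID. `per_client_rate` is just a name for this sketch.

```python
LINK_MB_S = 125.0  # theoretical maximum of one 1 Gbit link, no overhead


def per_client_rate(num_clients: int, num_links: int = 4,
                    raid_mb_s: float = 1000.0) -> float:
    """Best-case MB/s per client when all clients transfer at once."""
    if num_clients <= 0:
        raise ValueError("need at least one client")
    client_side = num_clients * LINK_MB_S  # each client has its own 1 Gbit port
    trunk = num_links * LINK_MB_S          # aggregated links to the server
    total = min(client_side, trunk, raid_mb_s)  # the slowest stage wins
    return total / num_clients


for clients in (1, 4, 8):
    print(f"{clients} clients: {per_client_rate(clients):.1f} MB/s each")
```

With four links in one direction the trunk tops out at 500 MB/s, so the RAID only becomes the limit once reads and writes in both directions are added up.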
